(Note that the flicker in the video is due to the interaction of the camera shutter with the 45 projector shutters, and is not visible to users)

Projected self-levitating light field display allows users to hover in remote places

GLASGOW – This week, Human Media Lab researchers will unveil LightBee - the world’s first holographic drone display. LightBee allows people to project themselves into another location as a flying hologram using a drone that mimics their head movements in 3D - as if they were in the room.

“Virtual Reality allows avatars to appear elsewhere in 3D, but they are not physical and cannot move through the physical space,” says Roel Vertegaal, Director of the Human Media Lab. “Teleconferencing robots alleviate this issue, but cannot always traverse obstacles. With LightBee, we're bringing actual holograms to physical robots that are not bound by gravity.”

Human Media Lab, Jackson Hall, Queen's, 35 Fifth Field Company Ln

3:00 / free

GEORGARAS (Kingston ON) JEREMY KERR (Kingston ON) DIANA LAWRYSHYN (Kingston ON)

Queen’s University’s Human Media Lab unveils world’s first interactive physical 3D graphics

Berlin – October 15th. The Human Media Lab at Queen’s University in Canada will be unveiling GridDrones, a new kind of computer graphics system that allows users to physically sculpt in 3D with physical pixels. Unlike in Minecraft, every 3D pixel is real, and can be touched by the user. A successor to BitDrones and the Flying LEGO exhibit – which allowed children to control a 3D swarm of drone pixels using a remote control – GridDrones allows users to physically touch each drone, dragging them out of a two-dimensional grid to create physical 3D models. 3D pixels can be given a spatial relationship using a smartphone app. This tells them how far they need to move when a neighbouring drone is pulled upwards or downwards by the user. As the user pulls and pushes pixels up and down, they can sculpt terrains, architectural models, and even physical animations. The result is one of the first functional forms of programmable matter.

Queen’s Human Media Lab unveils the world's first rollable touch-screen tablet, inspired by ancient scrolls

BARCELONA - A Queen’s University research team has taken a page from history, rolled it up and created the MagicScroll – a rollable touch-screen tablet designed to capture the seamless flexible screen real estate of ancient scrolls in a modern-day device. Led by bendable-screen pioneer Dr. Roel Vertegaal, this new technology is set to push the boundaries of flexible device technology into brand new territory.

The device comprises a high-resolution 7.5” 2K flexible display that can be rolled or unrolled around a central, 3D-printed cylindrical body containing the device’s computerized inner workings. Two rotary wheels at either end of the cylinder allow the user to scroll through information on the touch screen. When a user narrows in on an interesting piece of content that they would like to examine more deeply, the display can be unrolled and function as a tablet display. Its light weight and cylindrical body make it much easier to hold with one hand than an iPad. When rolled up, it fits in your pocket and can be used as a phone, dictation device or pointing device.

New light field displays effectively simulate teleportation

MONTREAL – This week, a Queen's University researcher will unveil TeleHuman 2 - the world’s first truly holographic videoconferencing system. TeleHuman 2 allows people in different locations to appear before one another life size and in 3D - as if they were in the same room.

“People often think of holograms as the posthumous Tupac Shakur performance at Coachella 2012,” says Roel Vertegaal, Professor of Human-Computer Interaction at the Queen’s University School of Computing. “Tupac's image, however, was not a hologram but a Pepper Ghost: A two-dimensional video projected on a flat piece of glass. With TeleHuman 2, we're bringing actual holograms to life.”

Using a ring of intelligent projectors mounted above and around a retro-reflective, human-size cylindrical pod, Dr. Vertegaal’s team has been able to project humans and objects as light fields. Objects appear in 3D as if inside the pod, and can be walked around and viewed from all sides simultaneously by multiple users - much like Star Trek's famed, fictional ‘Holodeck’. Capturing the remote 3D image with an array of depth cameras, TeleHuman 2 “teleports” live, 3D images of a human from one place to another - a feat that is set to revolutionize human telepresence. Because the display projects a light field with many images – one for every degree of angle – users need not wear a headset or 3D glasses to experience each other in augmented reality.

Visitors will be able to experience and play with new technology combining LEGO® bricks and drones.

COPENHAGEN - February 15-18, children and families visiting the LEGO® World expo in Copenhagen, Denmark will have the chance to make their brick-building dreams take flight with a flock of interactive miniature drones developed by the Human Media Lab at Queen’s University in Canada in collaboration with the LEGO Group’s Creative Play Lab.

The system allows children to arrange LEGO elements into a shape of their choice and watch as a group of miniature drones takes flight to mimic the shape and colour of their creation in mid-air. With the aid of tiny sensors and gyroscopes, the system also tracks when the children move, twist and bend their designs. The drones faithfully replicate any shape alterations as an in-air animation.

Monday 12th of December, 11:00 AM @HML 3rd Floor Jackson Hall, Queen's University

Professor Morten Fjeld

Head of t2i Lab, www.t2i.se

Chalmers University of Technology, Sweden

Abstract: The talk presents three projects in the field of emerging and alternative display techniques; the first two are in the field of haptic display, the third is in the area of mid-air display. The OmniVib project presents some basic studies and principles to leverage cross-body vibrotactile notifications for mobile phones. The HaptiColor project deals with a more specific challenge, but its insights bear on a wider range of applications: to assist the colorblind, we employed a vibration wristband that enables interpolating color information as haptic feedback. As part of a more futuristic initiative, we present a map navigation concept using a wearable mid-air display. The projects presented have been carried out in collaboration with NUS Singapore and University of Maryland (UMD), College Park.

Queen’s University’s Human Media Lab to unveil musical instrument for a flexible smartphone

KINGSTON - Researchers at the Human Media Lab at Queen’s University have developed the world’s first musical instrument for a flexible smartphone. The device, dubbed WhammyPhone, allows users to bend the display in order to create sound effects on a virtual instrument, such as a guitar or violin.

“WhammyPhone is a completely new way of interacting with sound using a smartphone. It allows for the kind of expressive input normally only seen in traditional musical instruments,” says Dr. Vertegaal.

Queen’s University’s Human Media Lab to unveil world’s first flexible lightfield-enabled smartphone.

KINGSTON - Researchers at the Human Media Lab at Queen’s University have developed the world’s first holographic flexible smartphone. The device, dubbed HoloFlex, is capable of rendering 3D images with motion parallax and stereoscopy to multiple simultaneous users without head tracking or glasses.

“HoloFlex offers a completely new way of interacting with your smartphone. It allows for glasses-free interactions with 3D video and images in a way that does not encumber the user,” says Dr. Vertegaal.

HoloFlex features a 1920x1080 full high-definition Flexible Organic Light Emitting Diode (FOLED) touchscreen display. Images are rendered into 12-pixel-wide circular blocks, each showing the full view of the 3D object from a particular viewpoint. These pixel blocks project through a 3D-printed flexible microlens array consisting of over 16,000 fisheye lenses. The resulting 160 x 104 resolution image allows users to inspect a 3D object from any angle simply by rotating the phone.
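The figures above can be checked with a little arithmetic. The sketch below is only an illustration: the release does not say how the lenses are arranged, so the hexagonal packing (rows spaced at √3/2 of the block width) and the rounding to the nearest row are assumptions, and `lens_grid` is a hypothetical helper name. Under those assumptions the numbers happen to line up with the quoted 160 x 104 image and "over 16,000" lenses, whereas a plain square grid would give only 90 rows.

```python
import math

def lens_grid(width_px, height_px, block_px, hex_packed=True):
    """Estimate how many lens blocks fit on a display.

    hex_packed=True is an ASSUMPTION (not stated in the release):
    rows of circular blocks are offset and spaced at block_px * sqrt(3) / 2.
    """
    cols = width_px // block_px
    row_pitch = block_px * math.sqrt(3) / 2 if hex_packed else block_px
    # Rounding to the nearest whole row is also an assumption.
    rows = round(height_px / row_pitch)
    return cols, rows

cols, rows = lens_grid(1920, 1080, 12)
print(cols, rows, cols * rows)  # columns, rows, total lens count

square_cols, square_rows = lens_grid(1920, 1080, 12, hex_packed=False)
print(square_cols, square_rows, square_cols * square_rows)
```

The trade-off this illustrates is that each lens spends a 12-pixel block on angular views, so the spatial resolution of the 3D image is far lower than that of the underlying panel.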

Queen’s University’s Human Media Lab to unveil world’s first handheld with a fully cylindrical display at CHI 2016 conference in San Jose, CA.

Researchers at Queen’s University’s Human Media Lab have developed the world’s first handheld device with a fully cylindrical user interface. The device, dubbed MagicWand, has a wide range of possible applications, including use as a game controller.

The device is similar to the Nintendo Wii remote, but with a 340-degree cylindrical display. Users can use physical gestures to interact with virtual 3D objects displayed on the wand. The device uses visual perspective correction to create the illusion of motion parallax; by rotating the wand, users can look around the 3D object.

Queen’s University’s Human Media Lab to unveil world’s first wireless flexible smartphone; simulates feeling of navigating pages via haptic bend input

KINGSTON - Researchers at Queen’s University’s Human Media Lab have developed the world’s first full-colour, high-resolution and wireless flexible smartphone to combine multitouch with bend input. The phone, which they have named ReFlex, allows users to experience physical tactile feedback when interacting with their apps through bend gestures.

“This represents a completely new way of physical interaction with flexible smartphones,” says Roel Vertegaal (School of Computing), director of the Human Media Lab at Queen’s University.

“When this smartphone is bent down on the right, pages flip through the fingers from right to left, just like they would in a book. More extreme bends speed up the page flips. Users can feel the sensation of the page moving through their fingertips via a detailed vibration of the phone. This allows eyes-free navigation, making it easier for users to keep track of where they are in a document.”

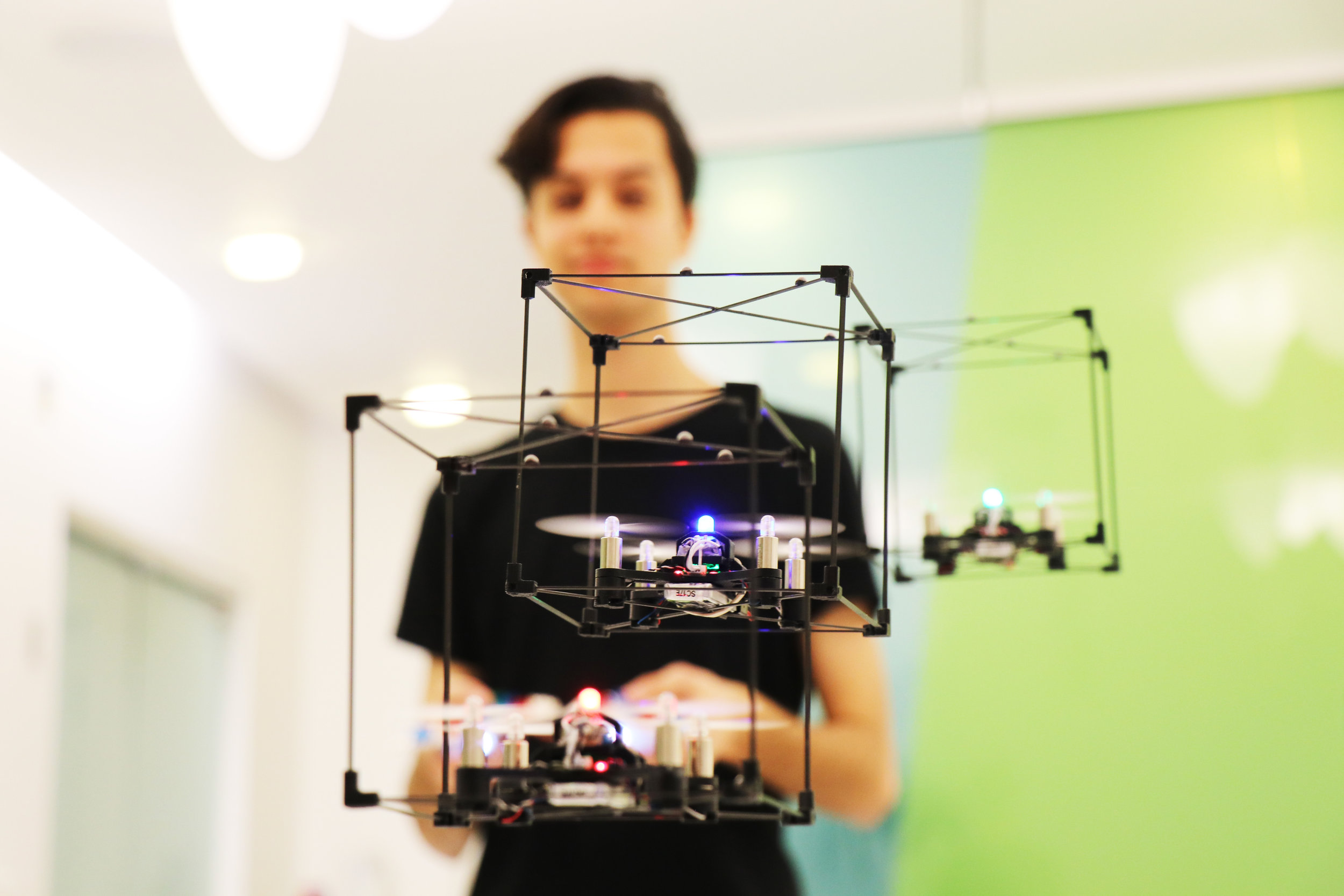

Queen’s University’s Roel Vertegaal says self-levitating displays are a breakthrough in programmable matter, allowing physical interactions with mid-air virtual objects

KINGSTON, ON – An interactive swarm of flying 3D pixels (voxels) developed at Queen’s University’s Human Media Lab is set to revolutionize the way people interact with virtual reality. The system, called BitDrones, allows users to explore virtual 3D information by interacting with physical self-levitating building blocks.

Queen’s professor Roel Vertegaal and his students are unveiling the BitDrones system on Monday, Nov. 9 at the ACM Symposium on User Interface Software and Technology in Charlotte, North Carolina. BitDrones is the first step towards creating interactive self-levitating programmable matter – materials capable of changing their 3D shape in a programmable fashion – using swarms of nano quadcopters. The work highlights many possible applications for the new technology, including real-reality 3D modeling, gaming, molecular modeling, medical imaging, robotics and online information visualization.

Queen’s University’s Human Media Lab presents 3D Printed Touch and Pressure Sensors at Interact'15 Conference

Queen's professor Roel Vertegaal and students Jesse Burstyn, Nicholas Fellion, and Paul Strohmeier introduced PrintPut, a new method for integrating simple touch and pressure sensors directly into 3D printed objects. The project was unveiled at the INTERACT 2015 conference in Bamberg, Germany, one of the largest conferences in the field of human-computer interaction. PrintPut is a method for 3D printing that embeds interactivity directly into printed objects. When developing new artifacts, designers often create prototypes to guide their design process about how an object should look, feel, and behave. PrintPut uses conductive filament to offer an assortment of sensors that an industrial designer can easily incorporate into these 3D designs, including buttons, pressure sensors, sliders, touchpads, and flex sensors.

Queen’s University’s Human Media Lab and the Microsoft Applied Sciences Group unveil DisplayCover at MobileHCI'15

Queen’s professor Roel Vertegaal and student Antonio Gomes, in collaboration with the Applied Sciences Group at Microsoft, unveiled DisplayCover, a novel tablet cover that integrates a physical keyboard as well as a touch- and stylus-sensitive thin-film e-ink display. The technology was released at the ACM MobileHCI 2015 conference in Copenhagen, widely regarded as a leading conference on human-computer interaction with mobile devices and services.

DisplayCover explores the ability to dynamically alter the peripheral display content based on usage context, while extending the user experience and interaction model to the horizontal plane, where hands naturally rest.

Everyone in tech knows the legend of Xerox PARC. In the early 1970s, members of the Palo Alto Research Center invented many of the basic building blocks of modern information technology, from bitmap graphics displays and WYSIWYG text editors to laser printers and the graphical user interface (GUI).

"Invent, don't innovate. Problem-finding beats problem-solving"

Biometric technologies are on the rise. By electronically recording data about individuals’ physical attributes such as fingerprints or iris patterns, security and law enforcement services can quickly identify people with a high degree of accuracy.

The latest development in this field is the scanning of irises from up to 40 feet (12 metres) away (http://news.discovery.com/tech/gear-and-gadgets/iris-scanner-identifies-a-person-40-feet-away-150410.htm). Researchers from Carnegie Mellon University in the US demonstrated they were able to use their iris recognition technology to identify drivers from an image of their eye captured from their vehicle’s side mirror.